The Importance of Backlinks In SEO: Past and Present

Using links to determine the popularity and quality of a site has long been a cornerstone of Google’s search engine. While earlier search engines depended mostly on onsite keywords when ranking webpages, Google co-founders Larry Page and Sergey Brin (then Ph.D. students at Stanford University) knew that keywords could too easily be manipulated to influence site rankings. Indeed, a problem facing early search engines was the practice of webmasters stuffing inappropriate keywords into their site pages. To rank for a particular term, such as ‘belize resorts,’ all a webmaster had to do was repeat the term over and over again in the site’s metadata or onsite text, with little or no variation, which in many cases made the on-page text barely readable for site visitors. By doing this, a site that had nothing to do with ‘belize resorts’ could easily rank highly for that term!

Therefore, while doing research at Stanford, Larry Page led the development of a search engine algorithm that would also take into account the number of times a site was linked to from other places on the web. His rationale was that each link constituted an implicit recommendation of a site; that is, if someone shared a link to a site, they were saying that they liked or approved of the site’s content and the business or person that it represented. The algorithm was named “PageRank” (after Larry Page), and the score was publicly reported as a whole number from 0 to 10, with 10 being the strongest indicator of site quality.

This notion that incoming links were a good method of scoring sites was one of the premises on which Google Inc. was founded in 1998. The rest is history, with other search engines following suit and sites tumbling out of the SERPs (search engine results pages) because their keyword-packed pages no longer mattered. In the years that followed, PageRank took increasing precedence in the strategy to evaluate websites for quality.
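To make the idea of links as ‘votes’ more concrete, here is a minimal Python sketch of the simplified, textbook form of a link-based ranking calculation. It is an illustration only and not Google’s production algorithm: the function name `pagerank`, the tiny example link graph, the damping value of 0.85, and the iteration count are all assumptions chosen for the demonstration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Score pages from a link graph using simple power iteration.

    `links` maps each page to the list of pages it links to.
    All names and parameter values here are illustrative assumptions.
    """
    # Collect every page that appears as a source or as a link target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)

    # Start with every page holding an equal share of the total score.
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline score regardless of links.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its 'vote' evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its score over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: pages a, b and d all link to c; c links back to a.
example_links = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}
scores = pagerank(example_links)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this toy graph the page with the most incoming links ends up with the highest score, and a page that is linked to by a high-scoring page outranks pages that nobody links to. That second effect is why a link from a well-regarded site counts for more than a link from an obscure one.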

The road to ensuring the quality of search results has not been without further hiccups, however. Webmasters soon discovered they could inflate the PageRank of their sites by placing large numbers of links around the web and pointing them back to their own sites. ‘Link farms’ began popping up all over the place: websites that were entirely dedicated to hosting outbound links. These link farms could generate large amounts of revenue by charging fees for their services, which many site owners were willing to pay to ensure good rankings. Chaos threatened to ensue, with people buying and trading links left, right, and center. Two entirely unrelated companies (an Alaskan casino and a dental firm in Germany, for instance) could be found swapping links with each other; in what way would a casino located all the way in Alaska be qualified to ‘endorse’ the content of a site belonging to a German dental firm? The two sites aren’t even in the same language! This was not good for the search experience, since search results were likely to be dominated by sites that were able to game the system, instead of those that were relevant to what the user was searching for. To combat these practices, dubbed ‘black hat link building,’ Google released Penguin in 2012, an update to its search engine algorithm that sniffed out those spammy links and penalized the websites that were involved.

An Example of a Link Farm

The overall Google algorithm is extremely complex and therefore difficult to understand; however, we do know that its Penguin component does the following:

- It identifies ‘link farms’ that send out hundreds of thousands of links to unrelated websites and discounts those ‘unnatural’ links in its PageRank system. It also penalizes the websites to which those links point by reducing their search engine rankings.

- It evaluates each occurrence of a link to a site and determines if the context of the placement is natural. It looks at anchor text (the visible, clickable words of a hyperlink), for example, and determines if the phrase or term used is related to the page being linked to. It also looks at the way anchor text fits in with the rest of the text on the page; anchor text that is repeated an unnatural number of times within a site, for instance, is discounted as spam. It is important, therefore, to vary the phrases that you use when hyperlinking.

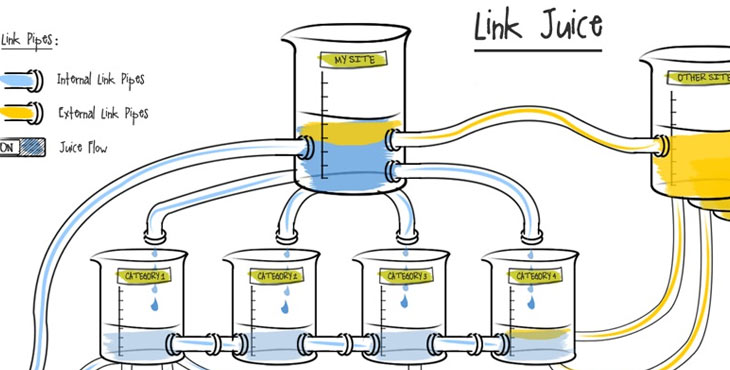

- Just as you would highly value recommendations from reputable, qualified people, so too does Google give greater consideration to links from reputable sources in its PageRank system. It’s important, therefore, to get links from sites related to your own that have a good PageRank themselves; the higher their rank in Google’s system, the more their ‘vote’ for your site will count. A link from the US embassy’s site, which has a PageRank of 6, for example, would be good for your site’s rankings if it fits in a relevant context.
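The advice above to vary your anchor text can be checked with a rough self-audit. The Python sketch below measures how much of a site’s backlink profile uses the same anchor phrase. It is not how Penguin works internally, which Google has not published; the function names, the sample anchors, and the 50% threshold are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def anchor_text_shares(anchors):
    """Return each distinct anchor phrase with its share of all backlinks."""
    # Normalize case and surrounding whitespace so near-identical phrases match.
    counts = Counter(anchor.strip().lower() for anchor in anchors)
    total = sum(counts.values())
    return {text: count / total for text, count in counts.items()}


def looks_repetitive(anchors, max_share=0.5):
    """Flag a profile where one phrase exceeds `max_share` of all anchors.

    The 0.5 default is an arbitrary illustrative threshold, not a known rule.
    """
    if not anchors:
        return False
    return max(anchor_text_shares(anchors).values()) > max_share


# Hypothetical backlink profiles for a resort website.
stuffed = ["belize resorts"] * 8 + ["Example Resort", "click here"]
varied = ["belize resorts", "Example Resort", "this beachfront hotel",
          "where we stayed", "examplesite.com"]

print(looks_repetitive(stuffed))
print(looks_repetitive(varied))
```

A profile in which one exact phrase accounts for most of the links is the kind of pattern worth fixing by hand, whatever the precise threshold a search engine might apply.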

So, then, with Google’s cutting-edge means of finding spammy links and torpedoing those sites that engage in suspicious link behavior, what best practices should you consider for your site? Page One Power, a reputable link building firm in the US, recently completed a study on how Google uses links today to rank web pages. Some of their more important findings included the following:

- Relevancy is key – it’s crucial to get links from sites that are related to your own, and to use those links in a manner that makes sense and that is as natural as possible.

- Quantity of links does not matter so much – there are many sites out there with a huge number of backlinks. However, in many cases it is only a fraction of them that Google considers valid and counts in its ranking system. The quality of links is what really matters – a site’s rankings may be improved considerably with as few as 15 good links.

- Fresh links matter – it’s important to keep getting new links to your site in order to improve, or even just maintain, your search engine rankings. New links signal to Google that your site is still useful and relevant to site visitors.

All this stresses the importance of ‘White Hat’ link building today – that is, link building that complies with Google’s rules for ensuring site quality. This kind of link building, by all indications, has to be a manual process, one that may be laborious and time-consuming but that will keep your site’s rankings from plummeting by ensuring its good standing with the search engines in the long run.

Thoughts and questions? Want to find out more about link building? Contact us directly.